The rapid development of AI technologies poses serious risks by accelerating the spread of disinformation, misinformation, deepfakes and hoaxes. At the same time, however, AI tools can significantly help society mitigate the impact of these information disorders.

Participants of the workshop “AI as a helper for fact-checking”, which the partners of the CEDMO consortium organized on Wednesday, May 30, in the building of the Faculty of Electrical Engineering at CTU in Prague, learned how AI tools developed by CTU experts work and how they help fact-checkers in their daily work. The invited speakers were Jan Drchal from the Centre for Artificial Intelligence at CTU, data analyst Lukáš Kutil from the CEDMO hub at Charles University, and Petr Gongala from the Demagog.cz project.

After welcoming the participants, workshop organizer Luboš Král from the Centre for Artificial Intelligence at CTU handed the floor to his colleague Jan Drchal, who presented two tools developed within the CEDMO project, with financial support from the National Recovery Plan (NRP), to increase society's resilience to information disorders.

Crowd-sourced message verification

The first tool enables crowd-sourced fact-checking supported by artificial intelligence methods. “The motivation for creating this tool was the desire to involve civil society in verifying an ever-growing volume of news and misinformation. The crowd-sourced approach has proven itself many times in practice, for example in Wikipedia, Stack Overflow, or Quora,” said Jan Drchal during the workshop. He went on to outline the outputs of his project: “The whole platform serves not only as a database of claims and verified reports, but also for data collection. The data is then used to train machine learning models that further automate verification and may eventually lead to an assistive verification tool. To make the system more attractive, it includes basic gamification features that let users compare themselves with each other by the number and quality of the messages they have verified.”

Thematic clustering of messages

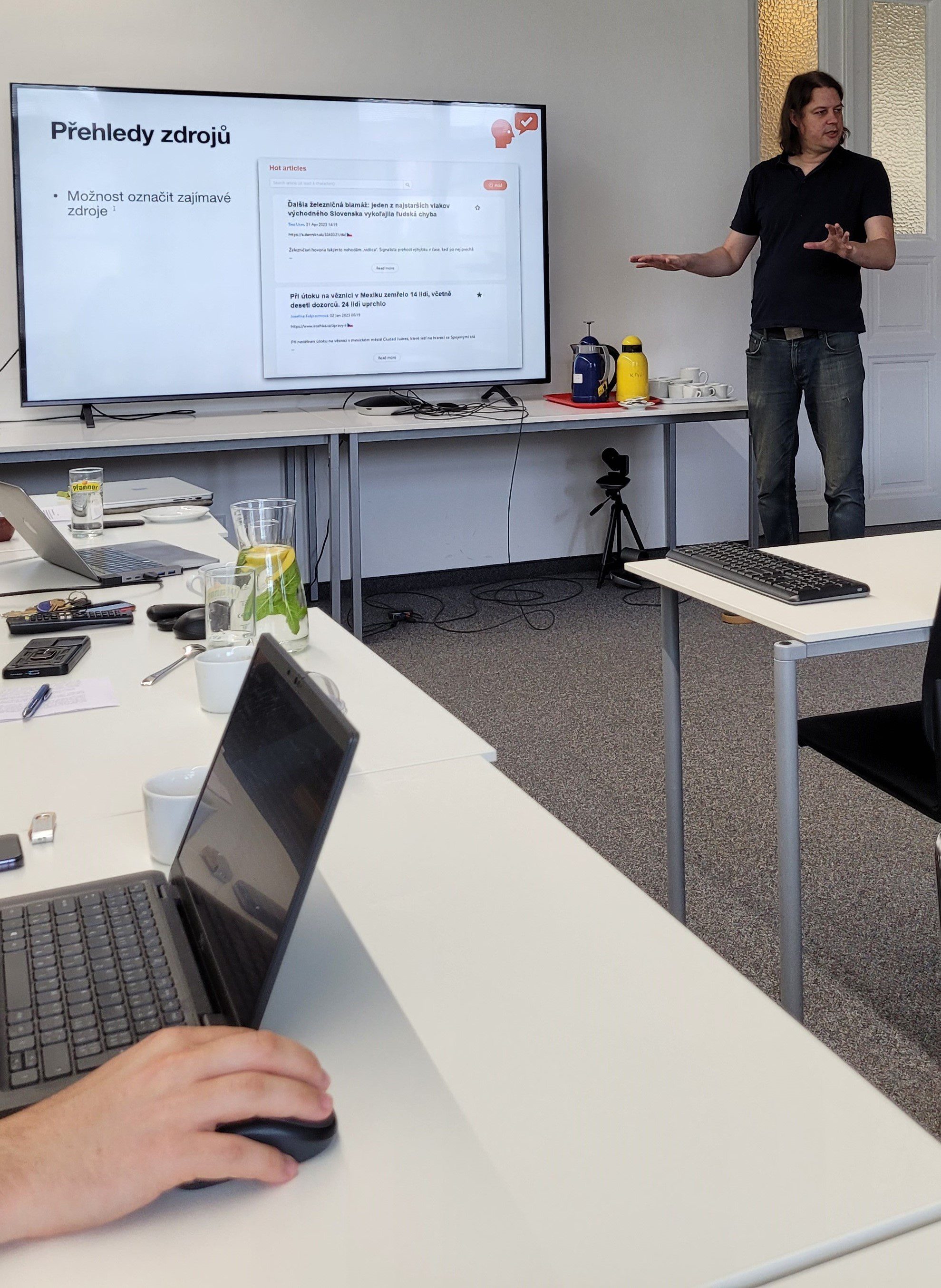

The second tool presented to the workshop participants uses artificial intelligence models for semantic search combined with thematic clustering of messages. CTU experts have already applied it in practice, automatically analysing messages about the war in Ukraine from 120 Telegram profiles. A major advantage of the tool is automated translation from Russian, or any other language, into English, so even a person who does not know the language of the social media posts can understand the analysed texts. The output is a visualization of the message clusters together with their topics and keywords, and the clusters can then be searched semantically. CTU is currently also working on visualizing the events contained in the messages as timelines.

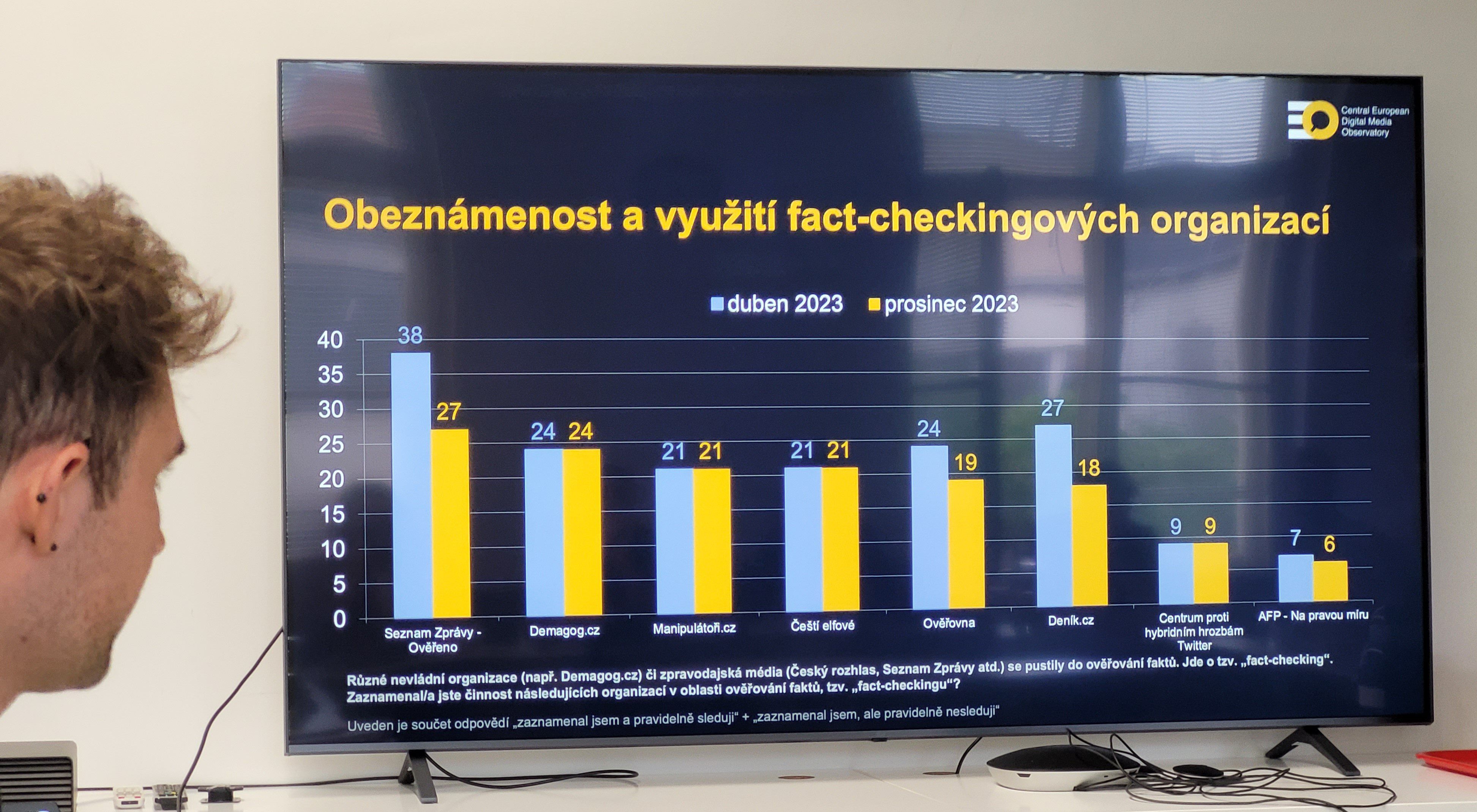
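For readers curious about the general idea, the pipeline described above (turn each message into a vector, group similar vectors into clusters, label each cluster with its most frequent keywords, and rank messages by similarity to a query) can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the CTU tool: it assumes the messages have already been translated into English, and it swaps the AI models for simple word-count vectors and a greedy threshold ("leader") clustering, so it matches shared words rather than meaning. All function names, the similarity threshold, the stopword list and the sample messages are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical stopword list; a real system would use a fuller one.
STOPWORDS = {"a", "an", "the", "in", "on", "by", "of", "to", "from", "near", "again"}


def vectorize(text):
    """Toy stand-in for an AI embedding model: lowercase word counts."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def cluster(messages, threshold=0.3):
    """Greedy 'leader' clustering: a message joins the first cluster whose first
    member (the leader) is similar enough, otherwise it starts a new cluster."""
    clusters = []
    for msg in messages:
        vec = vectorize(msg)
        for c in clusters:
            if cosine(vec, c["leader"]) >= threshold:
                c["members"].append(msg)
                break
        else:
            clusters.append({"leader": vec, "members": [msg]})
    return clusters


def keywords(members, k=3):
    """Top-k non-stopwords, counted by how many cluster members contain them."""
    counts = Counter()
    for msg in members:
        counts.update(w for w in vectorize(msg) if w not in STOPWORDS)
    return [word for word, _ in counts.most_common(k)]


def search(query, messages, top_n=2):
    """Rank messages by similarity to the query and return the best matches."""
    q = vectorize(query)
    return sorted(messages, key=lambda m: cosine(q, vectorize(m)), reverse=True)[:top_n]


# Hypothetical sample messages, assumed to be already translated into English.
messages = [
    "Drone attack hits power plant near Kharkiv",
    "Power plant in Kharkiv damaged by drone attack",
    "Grain exports resume from Odesa port",
    "Odesa port grain shipments delayed again",
]

for c in cluster(messages):
    print(keywords(c["members"]), c["members"])
print(search("drone strike on power plant", messages))
```

In a real system, `vectorize` would typically be replaced by a multilingual sentence-embedding model, which is what allows semantic search to match messages that share meaning but not vocabulary.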

In the follow-up programme, CEDMO Hub analyst Lukáš Kutil presented the outputs and conclusions of the longitudinal research CEDMO Trends. He focused specifically on questions examining how the Czech and Slovak public perceive fact-checking, which sources people use to verify whether information is true, and how aware they are of the activities of fact-checking organizations.

The next part of the programme was devoted to fact-checking: with the European elections approaching, Petr Gongala from the Demagog.cz project spoke about the most common disinformation narratives related to the European Union.

The two-hour workshop concluded with a discussion with the participants, which raised a number of related questions and produced ideas for further developing the presented tools, for example, how to better engage and motivate users of crowd-sourced fact-checking tools.